Preamble

As your self-hosted AI stack grows, you might want to use an LLM toolkit to easily manage services. One such tool is harbor. This toolkit allows you to easily install, manage and integrate Docker Compose stacks with simple CLI commands or an optional GUI.

Installing an LLM Stack With Harbor

Install The Necessary Dependencies

Git

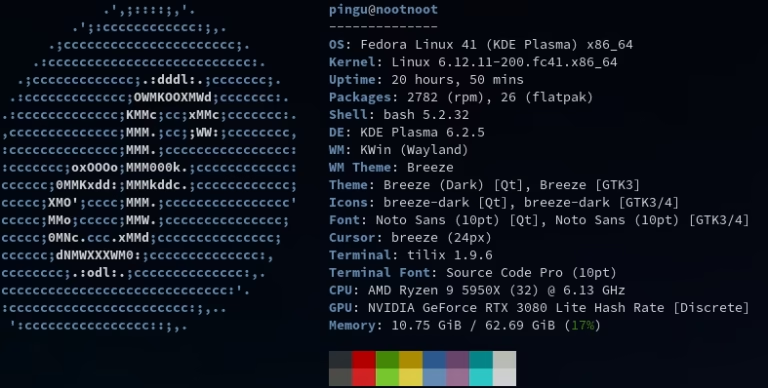

Fedora

sudo dnf install git

Debian

sudo apt install git

Arch

sudo pacman -S git

Docker and Docker Compose

Follow Docker’s official install documentation for your distribution here

NVIDIA Container Toolkit (Optional)

NVIDIA Container Toolkit is required for CUDA support.

Fedora

- Add NVIDIA’s Repository

curl -s -L https://nvidia.github.io/libnvidia-container/stable/rpm/nvidia-container-toolkit.repo | \

sudo tee /etc/yum.repos.d/nvidia-container-toolkit.repo

- (optional) Enable Experimental Packages

sudo yum-config-manager --enable nvidia-container-toolkit-experimental

- Install the NVIDIA Container Toolkit

sudo yum install -y nvidia-container-toolkit

Debian

Add NVIDIA’s production repository

curl -fsSL https://nvidia.github.io/libnvidia-container/gpgkey | sudo gpg --dearmor -o /usr/share/keyrings/nvidia-container-toolkit-keyring.gpg \

&& curl -s -L https://nvidia.github.io/libnvidia-container/stable/deb/nvidia-container-toolkit.list | \

sed 's#deb https://#deb [signed-by=/usr/share/keyrings/nvidia-container-toolkit-keyring.gpg] https://#g' | \

sudo tee /etc/apt/sources.list.d/nvidia-container-toolkit.list

(optional) Enable experimental packages

sed -i -e '/experimental/ s/^#//g' /etc/apt/sources.list.d/nvidia-container-toolkit.list

Install the NVIDIA Container Toolkit

sudo apt-get update && sudo apt-get install -y nvidia-container-toolkit

Arch

Install the NVIDIA Container Toolkit

sudo pacman -S nvidia-container-toolkit

Configure Docker

sudo nvidia-ctk runtime configure --runtime=docker

Restart the Docker Daemon

sudo systemctl restart docker

Add User to Docker Group

- Create docker Group

sudo groupadd docker

- Add user to the docker group

sudo usermod -aG docker $USER

- Log out and in then verify that docker runs without root

docker run hello-world

If docker still asks for root permissions, verify docker group status with

groups $USER

and restart.

Install Harbor CLI

bash

You can use the unsafe one liner to install Harbor. Ensure you review the code first.

curl https://av.codes/get-harbor.sh | bash

Optionally you can install Harbor with a package manager

npm

npm install -g @avcodes/harbor

PyPI

pipx install llm-harbor

Install Harbor App (optional)

If you’re running a Desktop Environment, a GUI app is available from the release pages, however it is still relatively new and may not behave as expected. If you are on Debian, you can install the latest .deb package. Download the latest version and install it with dpkg

sudo dpkg -i /path/to/Harbor_0.2.25_amd64.deb

If you’re using a non-debian based distro, you can install the appimage either manually or using a tool like bauh or appimagelauncher.

To install manually, download the latest version then change permissions to make it executable.

sudo chmod u+x /path/to/Harbor_0.2.25_amd64.AppImage

You can then launch the application

/path/to/Harbor_0.2.25_amd64.AppImage &

Once the GUI has launched you can free the app from the terminal with

disown

Windows and Mac OS users can use the .dmg or .exe/msi packages for their respective operating systems.

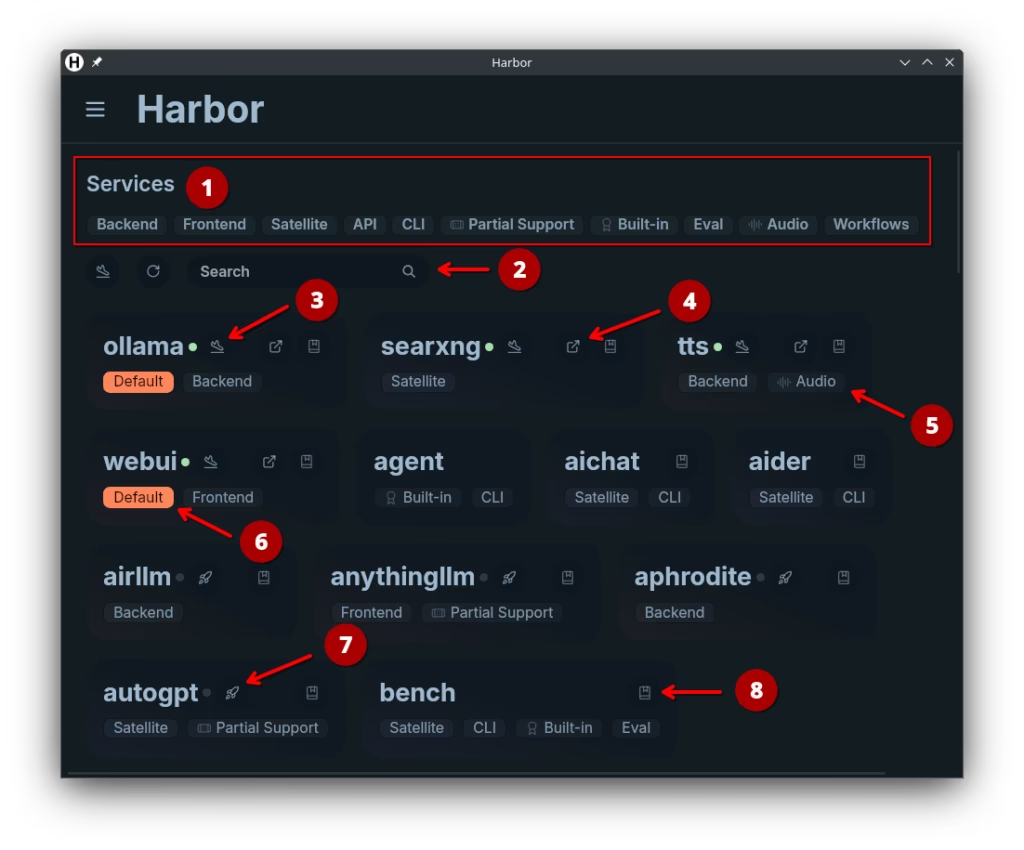

Harbor App Overview

2. Search for service name

3. Stop a service

4. Open a running service (broken in current AppImage)

5. Service type

6. Whether service is set to start by default with harbor up command

7. Start service

8. Open Documentation – Currently broken in AppImage. Right click to copy/paste URL as a workaround.

Running Open-WebUI stack with Harbor CLI

Harbor allows you to pick and choose the services and models you would like to integrate in to your AI workflow. You can refer to the Harbor user guide for a full list of commands.

Default (Open-WebUI + Ollama)

For now I want to focus on running a default stack of Open-WebUI with Ollama as the frontend and backend.

harbor up

Backends

I’ll also run a few additional inference backends

harbor up llamacpp vllm sglang ktransformers

Frontends

I’ll integrate Flux in Open-WebUI for text-to-image support

harbor up comfyui

Open http://localhost:340341 and let ComfyUI pull the Stable Diffusion models from huggingface.

Satellite Services

You can add integrate additional tools by running select satellite services. I would not advise running everything at once as tempting as it may be. Doing so will add complexity to your setup. Read through the features of each service and pick only the ones you need.

harbor up tts langflow sqlchat searxng llamacpp comfyui bolt

Each of these will require further configuration depending on what you wish to do with them which is beyond the scope of this post. Refer to their respective documentation for guidance.

Models

Deepseek has been making waves in the news lately, so time to test it out! I already tested out the r1 model in a previous post about Open-WebUI with Docker so this time I’ll test deepseek-coder, as some of my satellite services are development specific. You can pull Ollama models using harbor with “harbor ollama pull <model name>“.

harbor ollama pull deepseek-coder

You can also pull gguf models directly from huggingface for use with different inference backends

- Download

harbor hf download <user/repo> <file.gguf>

- Locate the file

harbor find file.gguf

- Set the path to the model

harbor config set llamacpp.model.specifier -m /app/models/<path/to/file.gguf>

Example:

- Download the model

for Dolphin3.0-R1-Mistral-24B

harbor hf download bartowski/cognitivecomputations_Dolphin3.0-R1-Mistral-24B-GGUF cognitivecomputations_Dolphin3.0-R1-Mistral-24B-Q4_K_M.gguf

Another model I’m interested in is a text-to-video model

harbor hf download pollockjj/ltx-video-2b-v0.9.1-gguf ltx-video-2b-v0.9.1-F16.gguf

Note: If the model is gated/private, you can set your huggingface access token with

harbor hf token <your HF token>

- Locate the model you wish to use. E.g.

harbor find ltx-video-2b-v0.9.1-F16.gguf

harbor find cognitivecomputations_Dolphin3.0-R1-Mistral-24B-Q4_K_M.gguf

- Set the path (repeat steps to switch between each model) – /app/models/hub/ is the mount point inside the container. Your model will be different. Check the output of your find command.

# switch to ltx-video

harbor llamacpp gguf /app/models/hub/models--pollockjj--ltx-video-2b-v0.9.1-gguf/snapshots/e5a475abf7bc740c6952ad5c7babee947de0907/ltx-video-2b-v0.9.1-F16.gguf

# switch to Dolphin-R1-Mistral

harbor llamacpp gguf /app/models/hub/models--bartowski--cognitivecomputations_Dolphin3.0-R1-Mistral-24B-GGUF/snapshots/6379a7231ae315b324f7f78cbe12a4ecb2794e49/cognitivecomputations_Dolphin3.0-R1-Mistral-24B-Q4_K_M.gguf

- Restart llamacpp to take effect

harbor restart llamacpp

Updating Docker Containers

Update default stack

harbor pull

Update specific service

harbor pull <service name>

Once the images are pulled, restart to take effect

harbor restart

Opening Harbor Services

You can open harbor services using the “harbor open <service>” command

harbor open webui

Next Steps

From here explore to your hearts content! Look at Harbor’s GitHub page periodically to check for new features.